-

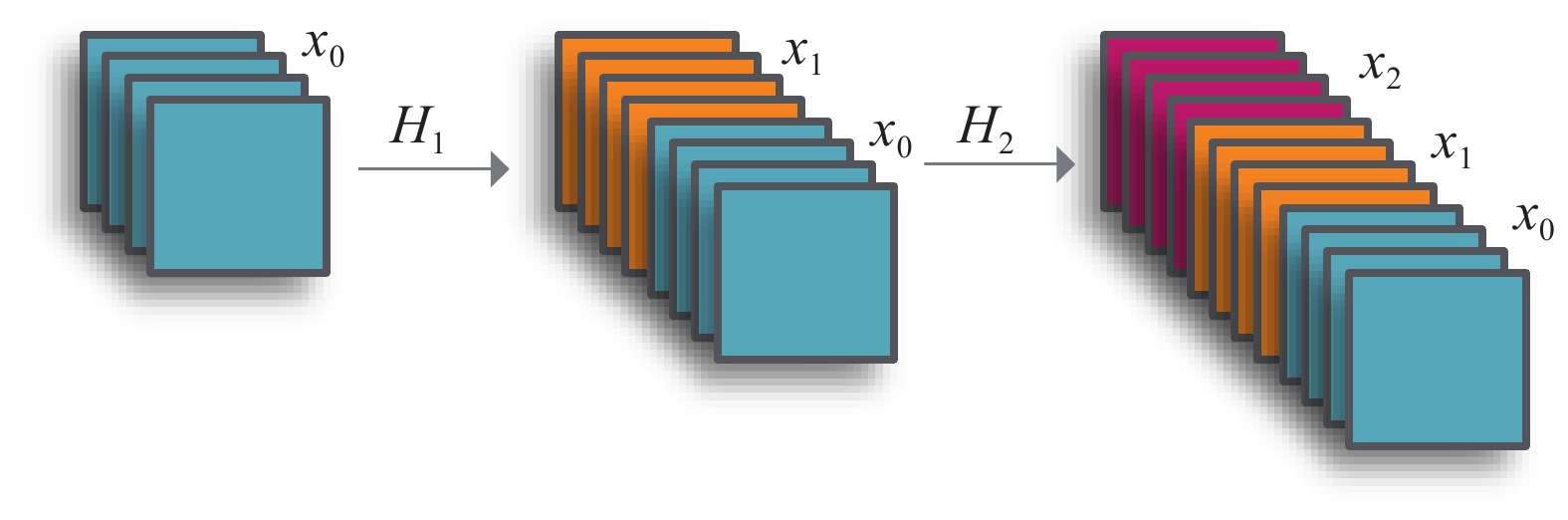

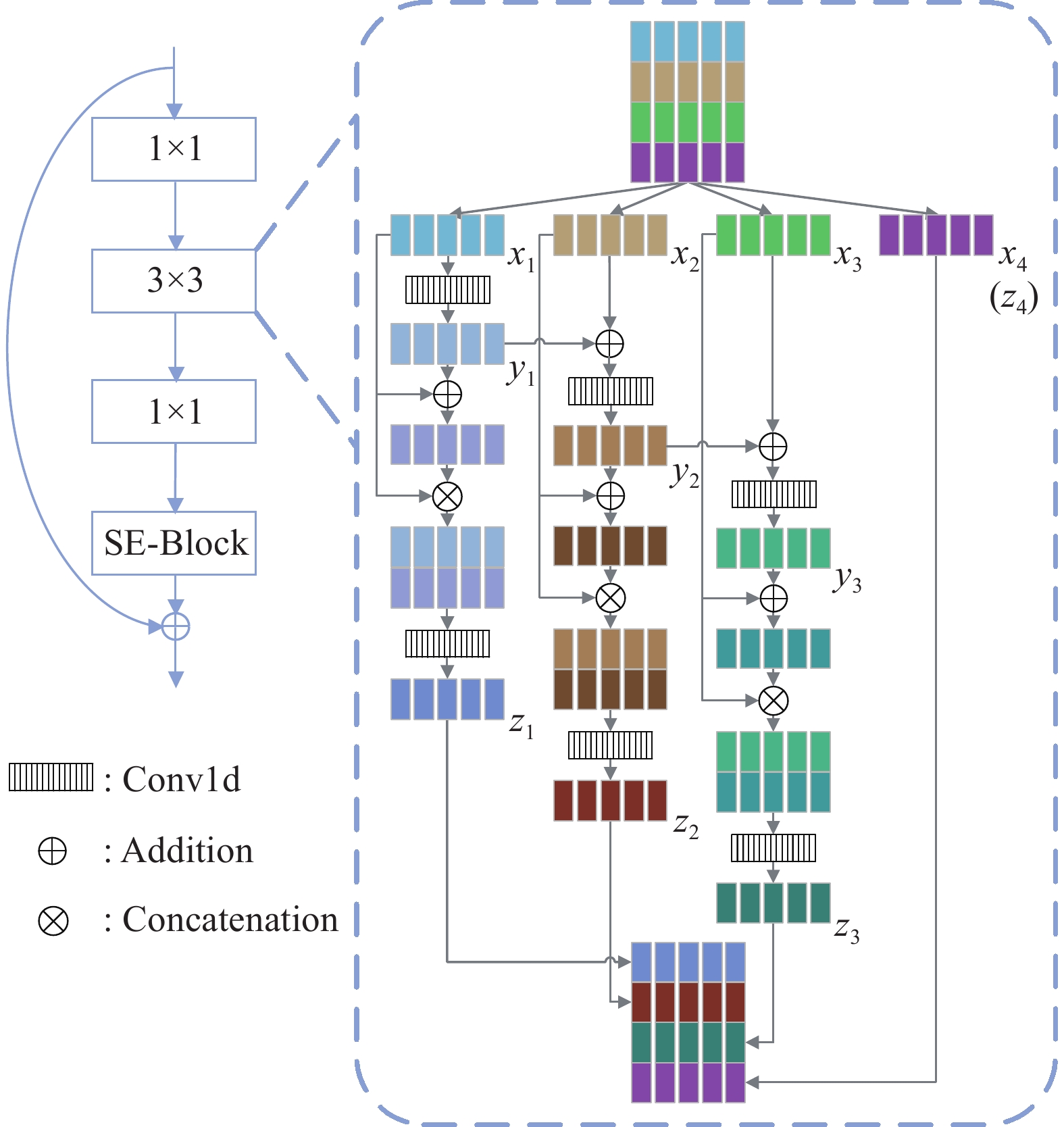

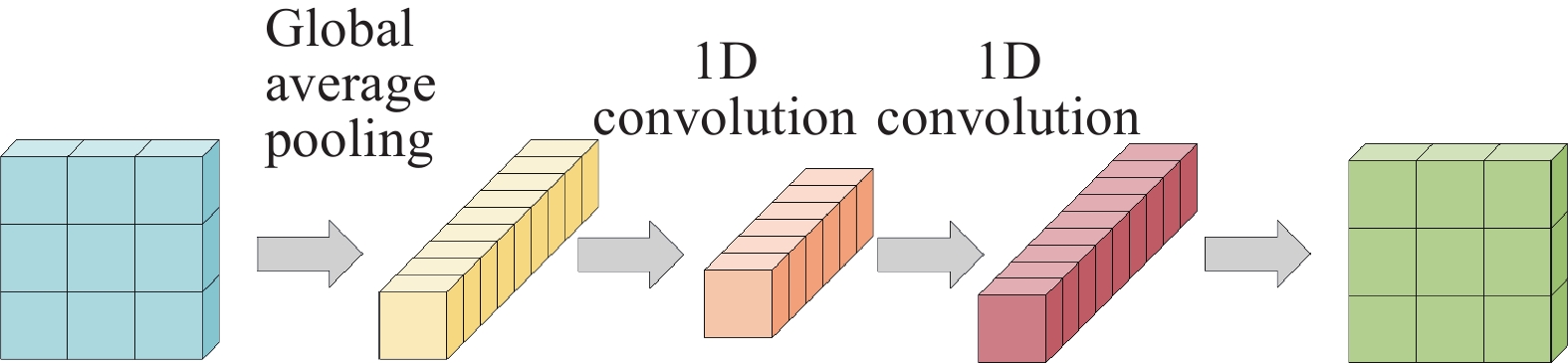

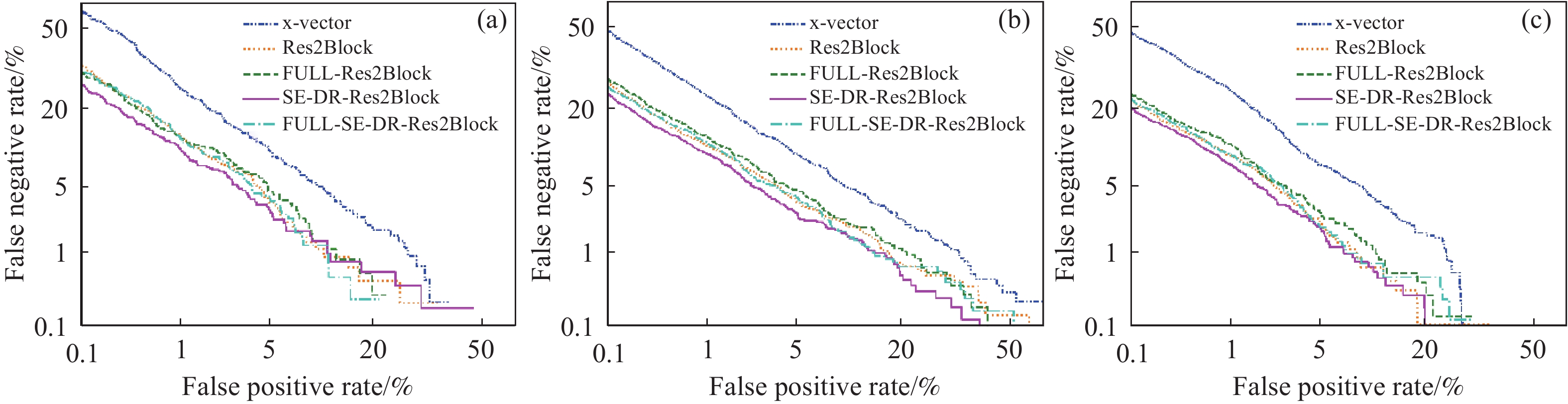

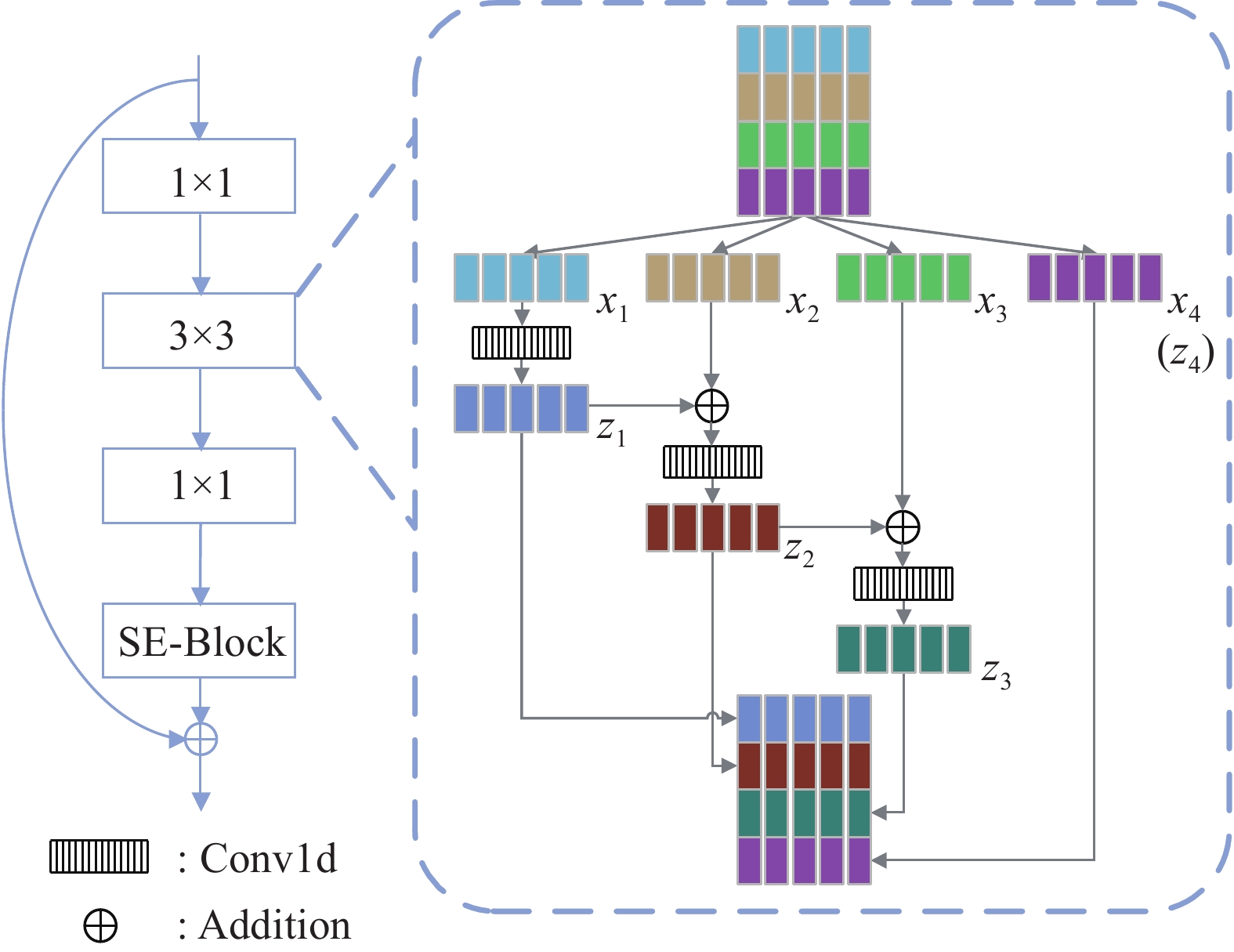

摘要: 針對聲紋識別領域中基于傳統Res2Net模型特征表達能力不足、泛化能力不強的問題,提出了一種結合稠密連接與殘差連接的特征提取模塊SE-DR-Res2Block(Sequeeze and excitation with dense and residual connected Res2Block). 首先,介紹了應用傳統Res2Block的ECAPA-TDNN(Emphasized channel attention, propagation and aggregation in time delay neural network)網絡結構和稠密連接及其工作原理;然后,為實現更高效的特征提取,采用稠密連接進一步實現特征的充分挖掘,基于SE-Block(Squeeze and excitation block)將殘差連接和稠密連接相結合,提出了一種更高效的特征提取模塊SE-DR-Res2Net. 該模塊以一種更細粒化的方式獲得不同生長速率和多種感受野的組合,從而獲取多尺度的特征表達組合并最大限度上實現特征重用,以實現對不同層特征的信息進行有效提取,獲取更多尺度的特征信息;最后,為驗證該模塊的有效性,基于不同網絡模型采用SE-Res2Block(Sequeeze and excitation Res2Block)、FULL-SE-Res2Block(Fully connected sequeeze and excitation Res2Block)、SE-DR-Res2Block、FULL-SE-DR-Res2Block(Fully connected sequeeze and excitation with dense and residual connected Res2Block),分別在Voxceleb1和SITW(Speakers in the wild)數據集開展了聲紋識別的研究. 實驗結果表明,采用SE-DR-Res2Block的ECAPA-TDNN網絡模型,最佳等錯誤率分別達到2.24%和3.65%,其驗證了該模塊的特征表達能力,并且在不同測試集上的結果也驗證了其具有良好的泛化能力.Abstract: Aiming at the problems of insufficient feature expression ability and weak generalization ability of the traditional Res2Net model in the field of voice print recognition, this paper proposes a feature extraction module known as the SE-DR-Res2Block, which combinedly uses dense connection and residual connection. The combination of low-semantic features with spatial information characteristics allows focusing more on detailed information and high-semantic information that concentrates on global information as well as abstract features. This can compensate for the loss of some detailed information caused by abstraction. First, the feature of each layer in the dense connection structure is derived from the feature output of all previous layers to realize feature reuse. Second, the structure and working principle of the ECAPA-TDNN network using traditional Res2Block is introduced. To achieve more efficient feature extraction, the dense connection is used to further realize full feature mining. Based on SE-block, a more efficient feature extraction module, SE-DR-Res2Net, is proposed by combining the residual join and dense links. As compared to the traditional SE-Block structures, the convolutional layers are used here instead of fully connected layers. Because they not only reduce the number of parameters needed for training but also allow weight sharing, thereby reducing overfitting. Therefore, effective extraction of feature information from different layers is essential for obtaining multiscale expression as well as maximizing the reuse of features. During the collection of more scale-specific feature information, a large number of dense structures can lead to a dramatic increase in parameters and computational complexity. By using partial residual structures instead of dense structures, we can effectively prevent the dramatic increase in parameter quantity while maintaining the performance to a certain extent. Finally, to verify the effectiveness of the module, SE-Res2block, Full-SE-Res2block, SE-DR-Res2block, and Full-SE-DR-Res2block are adopted based on the different network models. Voxceleb1 and SITW (speakers in the wild) datasets were used for Voxceleb1 and SITW, respectively. The performance comparison of Res2Net-50 models with different modules on the Voxceleb1 dataset shows that SE-DR-Res2Net-50 achieves the best equal error rate of 3.51%, which also validates the adaptability of this module on different networks. The usage of different modules on different networks, as well as experiments and analyses conducted on different datasets, were compared. The experimental results showed that the optimal equal error rates of the ECAPA-TDNN network model using SE-DR-Res2block had reached 2.24% and 3.65%, respectively. This verifies the feature expression ability of this module, and the corresponding results based on different test data sets also confirm its excellent generalization ability.

-

Key words:

- deep learning /

- voiceprint recognition /

- dense connection /

- residual connection /

- multiscale features

-

表 1 Voxceleb1測試集在不同Res2Net-50系統下的性能比較

Table 1. Performance comparison of the Voxceleb1 test set under different RES2Net-50 systems

System Parameters/ 106 EER/% minDCF (p = 0.1) (p = 0.01) (p = 0.001) x-vector[27] 4.19 0.212 0.391 0.512 Res2Net-50 24.50 3.73 0.205 0.381 0.485 FULL-Res2Net-50 24.50 3.91 0.210 0.385 0.481 SE-DR-Res2Net-50 35.19 3.51 0.197 0.374 0.473 FULL-SE-DR-Res2Net-50 35.19 3.70 0.206 0.379 0.478 表 2 Voxceleb1測試集在不同ECAPA-TDNN系統下的性能比較

Table 2. Performance comparison of the Voxceleb1 test set under different ECAPA-TDNN systems

System Parameters/106 EER/% minDCF (p = 0.1) (p = 0.01) (p = 0.001) x-vector[27] 4.19 0.212 0.391 0.512 Res2Block 14.73 2.49 0.132 0.269 0.312 FULL-Res2Block 14.73 2.51 0.145 0.296 0.372 SE-DR-Res2Block 16.71 2.24 0.120 0.245 0.300 FULL-SE-DR-Res2Block 16.71 2.37 0.137 0.280 0.364 表 3 SITW測試集在不同ECAPA-TDNN系統下的性能比較

Table 3. Performance comparison of the SITW test set under different ECAPA-TDNN systems

System Parameters/106 EER/% minDCF (p = 0.1) (p = 0.01) (p = 0.001) x-vector 6.92 0.357 0.567 0.832 Res2Block 14.73 3.91 0.179 0.348 0.551 FULL-Res2Block 14.73 4.0 0.179 0.349 0.529 SE-DR-Res2Block 16.71 3.65 0.175 0.334 0.509 FULL-SE-DR-Res2Block 16.71 3.83 0.179 0.337 0.513 表 4 SITW不同時長下的EER

Table 4. EER at different SITW duration

% System ERR <15 s 15–25 s 25–40 s x-vector 7.52 7.21 6.65 Res2Block 4.56 4.21 3.43 FULLRes2Block 4.75 4.30 3.52 SEDRRes2Block 4.06 3.82 3.30 FULLSEDRRes2Block 4.12 4.07 3.42 表 5 不同模型下的性能比較

Table 5. Performance comparison under different models

www.77susu.com<span id="fpn9h"><noframes id="fpn9h"><span id="fpn9h"></span> <span id="fpn9h"><noframes id="fpn9h"> <th id="fpn9h"></th> <strike id="fpn9h"><noframes id="fpn9h"><strike id="fpn9h"></strike> <th id="fpn9h"><noframes id="fpn9h"> <span id="fpn9h"><video id="fpn9h"></video></span> <ruby id="fpn9h"></ruby> <strike id="fpn9h"><noframes id="fpn9h"><span id="fpn9h"></span> -

參考文獻

[1] Zheng F, Li L T, Zhang H, et al. Overview of Voiceprint Recognition Technology and Applications. J Inf Secur Res, 2016, 2(1): 44.鄭方, 李藍天, 張慧等. 聲紋識別技術及其應用現狀. 信息安全研究, 2016, 2(1):44 [2] Hayashi V T, Ruggiero W V. Hands-free authentication for virtual assistants with trusted IoT device and machine learning. Sensors, 2022, 22(4): 1325 doi: 10.3390/s22041325 [3] Faundez-Zanuy M, Lucena-Molina J J, Hagmueller M. Speech watermarking: An approach for the forensic analysis of digital telephonic recordings[J/OL]. arXiv preprint (2022-03-12) [2022-09-19]. https://arxiv.org/abs/2203.02275 [4] Garain A, Ray B, Giampaolo F, et al. GRaNN: Feature selection with golden ratio-aided neural network for emotion, gender and speaker identification from voice signals. Neural Comput Appl, 2022, 34(17): 14463 doi: 10.1007/s00521-022-07261-x [5] Waghmare K, Gawali B. Speaker recognition for forensic application: A review. J Pos Sch Psychol, 2022, 6(3): 984 [6] Mittal A, Dua M. Automatic speaker verification systems and spoof detection techniques: review and analysis. Int J Speech Technol, 2022, 25: 105 doi: 10.1007/s10772-021-09876-2 [7] Burget L, Matejka P, Schwarz P, et al. Analysis of feature extraction and channel compensation in a GMM speaker recognition system. IEEE Trans Audio Speech Lang Process, 2007, 15(7): 1979 doi: 10.1109/TASL.2007.902499 [8] Bao H J, Zheng F. Combined GMM-UBM and SVM speaker identification system. J Tsinghua Univ Sci Technol, 2008(S1): 693鮑煥軍, 鄭方. GMM-UBM和SVM說話人辨認系統及融合的分析. 清華大學學報(自然科學版), 2008(S1):693 [9] Kenny P, Stafylakis T, Ouellet P, et al. JFA-based front ends for speaker recognition // 2014 IEEE International Conference on Acoustics, Speech and Signal Processing. Florence, 2014: 1705 [10] Cumani S, Plchot O, Laface P. On the use of i–vector posterior distributions in probabilistic linear discriminant analysis. IEEE/ACM Trans Audio Speech Lang Process, 2014, 22(4): 846 doi: 10.1109/TASLP.2014.2308473 [11] Variani E, Lei X, McDermott E, et al. Deep neural networks for small footprint text-dependent speaker verification // 2014 IEEE International Conference on Acoustics, Speech and Signal Processing. Florence, 2014: 4052 [12] Snyder D, Ghahremani P, Povey D, et al. Deep neural network-based speaker embeddings for end-to-end speaker verification // 2016 IEEE Spoken Language Technology Workshop. San Diego, 2016: 165 [13] Peddinti V, Povey D, Khudanpur S, et al. A time delay neural network architecture for efficient modeling of long tem-poral contexts // Sixteenth Annual Conference of the International Speech Communication Association. Dresden, 2015: 3214 [14] Okabe K, Koshinaka T, Shinoda K. Attentive statistics pooling for deep speaker embedding // Interspeech. Hyderabad, 2018: 2252 [15] Jiang Y H, Song Y, McLoughhlin I, et al. An effective deep embedding learning architecture for speaker verification // Interspeech. Graz, 2019: 4040 [16] Huang G, Liu Z, Van Der Maaten L, et al. Densely connected convolutional networks // Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Honolulu, 2017: 4700 [17] Zhou J F, Jiang T, Li Z, et al. Deep speaker embedding extraction with channel-wise feature responses and additive supervision softmax loss function // Interspeech. Graz, 2019: 2883 [18] Li Z, Zhao M, Li L, et al. Multi-feature learning with canonical correlation analysis constraint for text-independent speaker verification // 2021 IEEE Spoken Language Technology Workshop. Shenzhen, 2021: 330 [19] Desplanques B, Thienpondt J, Demuynck K. Ecapa-tdnn: emphasized channel attention, propagation and aggregation in tdnn based speaker verification // Interspeech. Shanghai, 2020: 3830 [20] Gao S H, Cheng M M, Zhao K, et al. Res2net: A new multi-scale backbone architecture. IEEE Trans Pattern Anal and Mach Intell, 2019, 43(2): 652 [21] Hu J, Shen L, Sun G. Squeeze-and-excitation networks // Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Salt Lake City, 2018: 7132 [22] Nagrani A, Chung J S, Zisserman A. Voxceleb: a large-scale speaker identification dataset[J/OL]. arXiv preprint (2018-05-30) [2022-09-19]. https://arxiv.org/abs/1706.08612 [23] McLaren M, Ferrer L, Castan D, et al. The speakers in the wild (SITW) speaker recognition database // Interspeech. San Francisco, 2016: 818 [24] Guo Z C, Yang Z, Ge Z R, et al. An endpoint detection method based on speech graph signal processing. J Signal Process, 2022, 38(4): 788 doi: 10.16798/j.issn.1003-0530.2022.04.013郭振超, 楊震, 葛子瑞, 等. 一種基于語音圖信號處理的端點檢測方法. 信號處理, 2022, 38(04):788 doi: 10.16798/j.issn.1003-0530.2022.04.013 [25] Zheng Y, Jiang Y X. Speaker clustering algorithm based on feature fusion. J Northeast Univ Nat Sci, 2021, 42(7): 952鄭艷, 姜源祥. 基于特征融合的說話人聚類算法. 東北大學學報(自然科學版), 2021, 42(7):952 [26] Deng J K, Guo J, Xue N N, et al. Arcface: Additive angular margin loss for deep face recognition // Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Long Beach, 2019: 4690 [27] Chen Z G, Li P, Xiao R Q, et al. A multiscale feature extraction method for text-independent speaker recognition. J Electron Inf Technol, 2021, 43(11): 3266 doi: 10.11999/JEIT200917陳志高, 李鵬, 肖潤秋, 等. 文本無關說話人識別的一種多尺度特征提取方法. 電子與信息學報, 2021, 43(11):3266 doi: 10.11999/JEIT200917 -

下載:

下載: